Giving Robots a Voice: Humans-in-the-Loop Voice Creation and Open-Ended Labeling

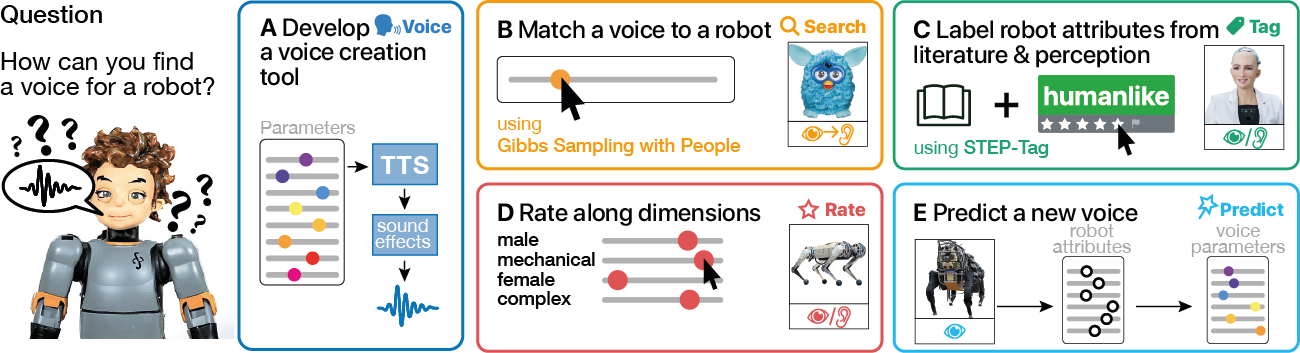

Speech is a natural interface for humans to interact with robots. Yet aligning a robot's voice with its appearance is challenging because both modalities can be described with a rich vocabulary. Previous research has explored only a few labels to describe robots and has tested them on a limited number of robots and existing voices. Here, we develop a robot-voice creation tool and follow it with large-scale human behavioral experiments (N=1,714). First, participants collectively tune robotic voices to match 175 robot images using an adaptive humans-in-the-loop pipeline. Then, participants describe their impression of each robot or its matched voice using a second humans-in-the-loop paradigm for open-ended labeling. The elicited taxonomy is then used to rate robot attributes and to predict the best voice for an unseen robot. We offer a web interface to help engineers customize robot voices, demonstrating the synergy between cognitive science and machine learning for engineering tools.